StarCluster is developed at the Massachusetts Institute of Technology for scientific high-performance computing (HPC) on Amazon Web Services (AWS). It supports building HPC clusters based on Open Grid Scheduler (formerly Sun Grid Engine) or HTCondor, a high-throughput computing environment previously known as Condor.

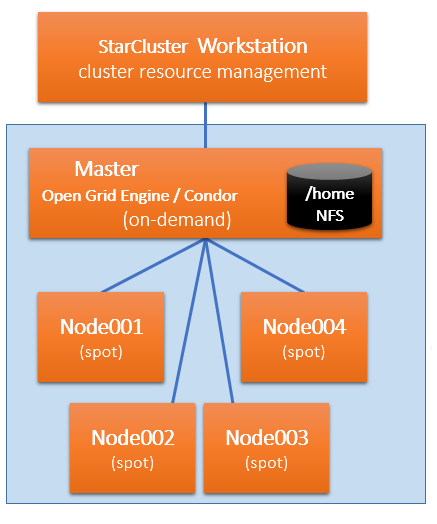

StarCluster offers Python-based tools for authentication, node management, elastic auto scaling and monitoring of HPC clusters. An EBS volume shared with worker nodes through NFS provides data persistence within a cluster; data can also be kept on Amazon EFS or on other Amazon storage services such as S3, Redshift or DynamoDB (see the picture below).

Setting up computational jobs on StarCluster is easy. Any pre-configured Ubuntu or Red Hat Amazon Machine Image (AMI) can be used as an HPC cluster node. StarCluster is easily configurable and extendable via plugins, which make it possible to customize HPC cluster nodes at boot time, install packages remotely, and generate setup and configuration files. One of our team members has designed, and is helping to build, such an extension for D-MASON, a toolkit for distributed multi-agent simulations.
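To make the plugin mechanism concrete, here is a minimal sketch of a plugin that installs packages on every node. It follows the `ClusterSetup` plugin interface from StarCluster (`run` and `on_add_node`); the class name `PackageInstaller`, the `packages` setting and the `apt-get` command are illustrative assumptions, and the fallback base class exists only so the sketch can be read and exercised without StarCluster installed.

```python
try:
    # Real plugin base class when StarCluster is installed.
    from starcluster.clustersetup import ClusterSetup
except ImportError:
    # Stand-in (assumption) so the sketch runs without StarCluster.
    class ClusterSetup(object):
        pass


class PackageInstaller(ClusterSetup):
    """Hypothetical plugin: install apt packages on every cluster node."""

    def __init__(self, packages="htop"):
        # Plugin settings arrive from the config file as strings,
        # so a comma-separated list is split here.
        self.packages = [p.strip() for p in packages.split(",") if p.strip()]

    def _install(self, node):
        # node.ssh.execute runs a shell command on the remote node.
        node.ssh.execute("apt-get -y install " + " ".join(self.packages))

    def run(self, nodes, master, user, user_shell, volumes):
        # Called once after the cluster has booted.
        for node in nodes:
            self._install(node)

    def on_add_node(self, node, nodes, master, user, user_shell, volumes):
        # Called when a node joins a running cluster, e.g. via auto scaling.
        self._install(node)
```

A plugin like this would typically be enabled through a `[plugin ...]` section in the StarCluster config file whose `setup_class` points at the class, and then listed in the cluster template's `plugins` setting; the module path depends on where the file is placed.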

It is also worth noting that StarCluster comes with a sensible security configuration: an entire cluster runs within a single Amazon security group, and every node in the cluster is accessible over SSH using private key authentication.

StarCluster supports running large computational jobs on spot instances, which cost much less than on-demand instances. For one of our customers we built an extension to the StarCluster auto scaler that scales the HPC cluster with spot instances and integrates with our Bid Server.
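The kind of decision a spot-aware auto scaler has to make can be sketched in a few lines. This is an illustrative policy only, not the logic of the StarCluster auto scaler or of our extension: the function name `nodes_to_add` and all of its parameters are hypothetical, and the current spot price is assumed to come from an external source such as a bid service.

```python
import math


def nodes_to_add(queued_jobs, slots_per_node, current_nodes, max_nodes,
                 spot_price, max_bid):
    """Return how many spot nodes to request (hypothetical policy)."""
    # Do nothing if the queue is empty or spot capacity is too expensive.
    if queued_jobs <= 0 or spot_price > max_bid:
        return 0
    # Enough nodes to give every queued job a slot ...
    wanted = math.ceil(queued_jobs / slots_per_node)
    # ... but never more than the cluster size limit allows.
    headroom = max(0, max_nodes - current_nodes)
    return min(wanted, headroom)
```

For example, with 10 queued jobs, 4 slots per node, 2 running nodes and a limit of 8 nodes, the sketch requests 3 nodes as long as the spot price stays at or below the maximum bid, and none once the price exceeds it.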